Author:

Dr. Serhiy Shturkhetskyy, Chairman of the Independent Media Trade Union of Ukraine, PhD in Public Administration, Associate Professor (Ukraine)

The article is published as part of the online course "Artificial intelligence as an instrument of civic education": https://online.eence.eu/

Artificial Intelligence (AI) can be a powerful tool for improving information literacy, developing critical thinking, and ensuring citizens' ability to discern reliable information in the digital age. AI-based tools have the potential to significantly improve information literacy and critical thinking in several ways:

1) developing critical thinking by providing users with different perspectives on a topic, which requires them to weigh competing viewpoints [1]; 2) developing digital literacy by teaching skills such as information retrieval, evaluation and synthesis [3]; 3) fostering digital citizenship by helping users become responsible and informed digital citizens capable of distinguishing credible information sources from unreliable ones [2].

But apart from positive changes and new opportunities for the development of media literacy, AI tools have already become a serious challenge. Researchers point out that the rapid development of AI technologies and their penetration into the educational sphere raise ethical issues of creating and distributing digital content more acutely than ever before. "Artificial intelligence has changed the traditional ways of learning, so it requires information literacy. The ethical dimension of information literacy is fundamental to assessing the implications of artificial intelligence" [3].

So let's talk about how artificial intelligence has already changed our lives, how it will go on changing them where information literacy is concerned, and how AI can be used to improve information literacy.

Of course, the issue is very serious, because we understand all the threats to information security to which virtually all countries of the world are now exposed, especially Ukraine, Moldova, Georgia, Armenia and other countries of the Eastern Partnership. We are experiencing great changes in the information space, the spread of fake news and the dominance of propaganda.

As the UNESCO report notes: "ChatGPT has no ethical principles and cannot distinguish truth from bias or truth from fiction ('hallucinations'). Critical analysis and cross-referencing of other sources are essential when using its results" [1].

For AI there are in fact no ethical notions of "truth" and "untruth"; its product is just generated text, without any ethical "colouring". This is what the UNESCO report says, and this is what we should keep in mind when we talk about the use of AI in education in particular and about content on the Internet in general. According to some estimates, AI-generated content will account for 80-90 per cent of all content on the Internet in just a few years. The amount of generated information grows every second: while people are sleeping, walking and having fun, AI is generating, generating, generating... Tirelessly and without a break, and not only text, but also images and videos.

In this regard, the position of search engines is of interest, for example that of a company as large as Google. The company's statement of 8 February 2023 says: "The quality of content is much more important to us than how it is created. It is because of this that we have been providing users with reliable and high-quality information for many years. Artificial intelligence offers a new level of self-expression and creativity, and helps people create great content for the web" [4].

That is, Google has resolved the ethical problem for itself in this way: as long as the standards by which Google evaluates content (experience, expertise, authoritativeness and trustworthiness) do not suffer, AI-generated content can be tolerated.

But of course, both large corporations and ordinary users are increasingly thinking about what needs to be done to prevent the quality of content from declining. Numerous studies on the legal regulation of such content address this question, which is beyond the scope of our lecture. In the meantime, there is a recommendation to label content if AI was involved in its creation; Google, for example, recommends that you indicate when you used artificial intelligence to create a text, although this is not yet mandatory. We agree that labelling is very important, but it is obviously not enough to determine the reliability of the content.

So how can artificial intelligence help in determining the validity of information, including information generated by AI? Probably, first of all, we should create the prerequisites for this by training AI accordingly. The AI itself does not "know" anything in the usual human sense; it just operates on the array of data put into it. If you feed the AI an array of data describing a flat Earth, the machine will take it as "truth" and will reproduce it whenever it is convenient. In other words, the demand for correct data challenges those who train the AI to select data that is true. Even in the technical sciences there are difficulties when it comes to the latest hypotheses that have not yet been tested, let alone in the social sciences, where there are many different approaches to interpreting the same events.

A few AI tools to help you with your Google searches:

perplexity.ai - this service works like ChatGPT: you can ask it a question in any language and get an answer with links to sources. The service is online, does not require registration and is free.

chat.openai.com - ChatGPT can be a good partner in keyword research. Just describe exactly what you need to find, and the neural network will suggest a relevant query.

chatpdf.com - quickly and easily analyses any PDF file. If you need to study a document in a foreign language, upload it and start asking questions in your language. The chatbot will provide answers based on the information in the file and will even show you the page number.

gpt4all.io - works without an internet connection. You can test it on your own work: upload a text document and ask what your text is about.

Determining whether content is human-created or AI-created

Text

As we've already mentioned, the fact that content was created by AI, especially when we are talking about text, is no reason to consider the information fake. Images and videos can likewise be illustrative: we do not call paintings or feature films fake. But, of course, if a text was created with the use of AI and the author is silent about it, this may be a reason to check the content more carefully. After all, if the content is ethical, why hide the details of its creation?

There are several tools, themselves based on AI, for determining whether a text was written by a human or generated by AI. But the quality of these tools leaves much to be desired. Firstly, so far they work best with English-language resources and English text. Secondly, they themselves report the accuracy of their diagnostics as ranging from 26 to 80%, which is too low for serious expert analysis.
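To see why a figure at the lower end of that 26 to 80% range is a problem, here is a minimal Python sketch that applies Bayes' rule to a detector's verdict. All numbers are assumptions chosen for illustration: the 26% is treated here as the share of AI texts the detector catches, while the 10% false-alarm rate and the shares of AI text in the sample are hypothetical and do not describe any particular service.

```python
def probability_flagged_text_is_ai(detection_rate, false_alarm_rate, share_of_ai_texts):
    """Bayes' rule: probability that a text flagged as 'AI' really is AI-generated.

    detection_rate    - share of AI texts the detector flags (assumed)
    false_alarm_rate  - share of human texts the detector flags by mistake (assumed)
    share_of_ai_texts - share of AI texts among everything being checked (assumed)
    """
    flagged_ai = detection_rate * share_of_ai_texts
    flagged_human = false_alarm_rate * (1.0 - share_of_ai_texts)
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0:
        return 0.0  # the detector never flags anything
    return flagged_ai / total_flagged


# Hypothetical scenarios: half of the checked texts are AI-written, then only a tenth.
for share in (0.5, 0.1):
    p = probability_flagged_text_is_ai(0.26, 0.10, share)
    print(f"share of AI texts {share:.0%}: a flagged text is AI-written with probability {p:.0%}")
```

Under these assumed numbers, a flagged text is really AI-written in roughly 72% of cases when half of the checked texts come from AI, and in only about 22% of cases when a tenth of them do. The same verdict means very different things depending on the material being checked.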

Today there are several popular services for determining the originality of texts (i.e. whether they were created by a human). The number and quality of these services are increasing day by day. One such service is GPT Detector [5].

If you are not sure that you have original "human" content in front of you, GPT Detector will help you figure it out. Copy and paste the text into the tool and click Check: the response will display the probability, as a percentage, that the content was generated using GPT-3, GPT-4 or ChatGPT.

Figure 1. GPT Detector determined that the lyrics of the song "My Way", written by Paul Anka for Frank Sinatra, are only 2% similar to generated content

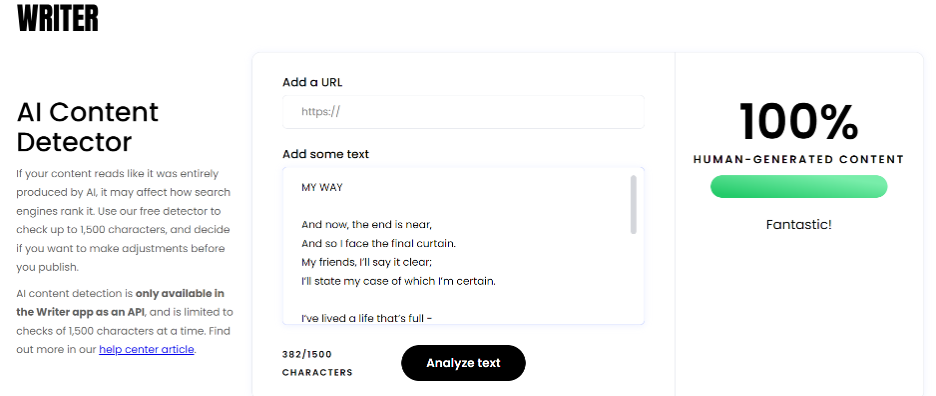

Other services can also help sort out the origin of content. AI Content Detector checks up to 1,500 characters [6]. Paste the content or a link to it into the appropriate fields and click Analyze text.

Figure 2. The AI Content Detector service determined with 100% certainty that the lyrics of the song "My Way" were created by a human and also gave a rating of "Fantastic!".

Based on the above, we can formulate recommendations on how to identify content created by a neural network:

And yet, is it safe to use AI-generated texts? Probably no more than it is safe to use human-generated texts. After all, along with useful and clear instructions, AI can give incorrect information based on its data. Besides, AI services (like ChatGPT) often do not perform fact-checking and do not provide data on sources.

Figure 3. In May 2023, a biographical sketch of the writer Oles Gonchar was posted on the social media accounts of a Ukrainian TV channel [7]. Real archive photos from the writer's life were used, but the text was generated by AI and contained such inconsistencies with historical truth that the TV channel had to apologise to its audience.

So the question of the accuracy of the generated texts is very acute. This is especially true for topics that fall into the YMYL (Your Money or Your Life) category - medicine, health, finance.

But we certainly won't limit ourselves to determining whether a text was created by a human or an AI. After all, just as humans can create fake news, AI can generate quite realistic and truthful texts. At the very least, ChatGPT generates cooking recipes quite well.

To facilitate the work of journalists and to identify fake texts (especially English-language ones), the Factiverse service was created [8].

Factiverse is an AI-based project that journalists can use to speed up the fact-checking process. Based on research conducted at the University of Stavanger, the project includes an AI Editor that finds misinformation in content and suggests credible resources, the FactiSearch fact checking database and the Microfacts tool that helps find relevant context and explains jargon and professional terms.

Figure 4. Using the Factiverse service, we checked the information of the EENCE network about the launch of the Educational Caravan in Ukraine. The service analysed three sentences and found that none of them contains statements that are disputable from a fact-checking point of view.

Fact-checking services have been well established for several years now, and they use various technologies, including AI, to verify the veracity of information.

Here are some of them:

Snopes: This is one of the oldest and best known fact-checking services that analyses urban legends, gossip and other claims to determine their veracity[9].

FactCheck.org: This independent service focuses on verifying politicians' statements and political ads in the United States[10].

PolitiFact: This is a project from the Tampa Bay Times that verifies the statements of US politicians using the "Truth-O-Meter" system[11].

BBC Reality Check: This service from the BBC analyses facts and statements in the news to help readers sort out the veracity of information[12].

Full Fact: This is a British independent organisation that fact-checks news stories and politicians' statements[13].

These services use a combination of traditional journalistic methods and modern technology, including AI, to verify and analyse information.

Image

A Vietnamese artist known as Ben Moran got into trouble in late 2022 when his cover for a fantasy novel was labelled AI-created by the moderators of a major art community [14]. The artist eventually proved his case by providing evidence of the authenticity of the work, on which, according to the cited report, he had spent 100 hours.

But it's not just artists who are now getting headaches from AI "creativity". The availability of the technology has allowed millions of users to act out their fantasies about famous personalities. One of the most memorable (and relatively harmless) fake images of 2023 was Pope Francis dressed in a white puffer jacket.

Figure 5. The Pope's modern fashion "look" garnered millions of rave reviews

The image quickly went viral [15], and only a few small details revealed that the picture had been created by an AI service.

Figure 6. A detailed examination of the image shows that the character's hand is not clearly drawn, the crucifix lacks correct right angles, and the edge of the lens of the glasses merges into the shadow.

After this case and after the spread of a fake photo of the arrest of former US President Donald Trump, the Midjourney service announced a transition to paid access [16]. But, you will agree, a $10 monthly subscription can hardly be a serious barrier for those who really want to generate fakes. Still, the success of such fakes lies not so much in the realism of the image (there are still many nuances that give away an AI-generated image) as in the sphere of social psychology. People want to see something expected or, on the contrary, something unusual, and they are willing to believe the visualisation. Therefore, to determine whether the image in front of us is a fake (an AI-generated image is not real a priori), we will need the good old-fashioned fact-checking skills described earlier in many manuals [17].

To determine whether the image in front of you is generated or not, the AI or NOT service has been created [18]. However, the service has some limitations related to how it works. The service "knows" the drawing algorithms of different programmes and "reads" the machine algorithm from the image, including watermarks, tags and other possible specific features of generated content that are invisible to the eye. But if we offer the service a screenshot or a photo of the generated image, it is lost: it cannot read the algorithm and cannot see the specific marks.
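One reason a screenshot defeats tag-based checks can be illustrated with a small Python sketch. It is not the algorithm of AI or NOT, which is not described in this lecture; it only assumes, for illustration, that a generator writes a text tag into a PNG file and that a screenshot is a new file containing no such tag. The generator name and both helper functions are hypothetical.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type, data):
    """Pack one PNG chunk: length, type, data, CRC."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def build_png(text_entries):
    """Build a tiny 1x1 greyscale PNG carrying the given tEXt tags (for the demo only)."""
    header = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    body = make_chunk(b"IHDR", header)
    for keyword, text in text_entries.items():
        payload = keyword.encode("latin-1") + b"\x00" + text.encode("latin-1")
        body += make_chunk(b"tEXt", payload)
    body += make_chunk(b"IDAT", zlib.compress(b"\x00\x00"))  # one black pixel
    body += make_chunk(b"IEND", b"")
    return PNG_SIGNATURE + body


def read_text_chunks(png_bytes):
    """Return the keyword/text pairs stored in a PNG's tEXt chunks."""
    if not png_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    found = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(png_bytes):
        length, chunk_type = struct.unpack(">I4s", png_bytes[pos:pos + 8])
        data = png_bytes[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            keyword, _, text = data.partition(b"\x00")
            found[keyword.decode("latin-1")] = text.decode("latin-1")
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC
        if chunk_type == b"IEND":
            break
    return found


# The "original" carries a tag written by a hypothetical generator;
# the "screenshot" shows the same pixel but is a fresh file without the tag.
original = build_png({"Software": "hypothetical-image-generator"})
screenshot = build_png({})
print(read_text_chunks(original))
print(read_text_chunks(screenshot))
```

The pixels of the two files are identical, but only the first still carries a mark that a tag-based check could read. Telling the two apart would require analysing the picture itself, which is a much harder task.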

Figure 7. The service found it difficult to answer when we uploaded a popular image of Donald Trump's fake arrest (we took the image from a news site).

And yet AI algorithms still have shortcomings that make it possible to determine whether an image is fake. But you will have to do it manually: as the example above shows, there are not yet sufficiently developed AI-based services for identifying fake images. Not yet.

Therefore, the main tool for checking photos and videos is still reverse image search in various systems: TinEye, Google Lens, Bing. Sometimes you have to search in Yandex as well, especially if you need to find information in the Russian-speaking segment. Often thanks to such a search one manages to find the original source or at least hints where to continue checking.

When analysing images presumably created by AI, the following recommendations can be given:

Deep Fakes

What are "deep fakes"? They are manipulated video or audio recordings that look and sound realistic. These kinds of fakes are no longer as harmless as the Pope's new outfit, and in times of social upheaval and war they can have unpredictable consequences.

Here, for example, is a fake Macron having fun during the protests in Paris [19]: the photos were assembled into a collage that gives the impression of a video.

Figure 8. Different photos of Macron "in action" created the effect of the French President's real participation in the riots in Paris

But even this case is a prank rather than a serious attempt to discredit the politician. The video with the fake Zelensky [20], made during the attempts to hold the first negotiations in March 2022, was no laughing matter. The Ukrainian authorities even had to warn that more and more such fakes might appear while negotiations with Russia were underway.

AI-based tools are also being developed for identifying fake videos, probably the most difficult task for fact checkers both in the past and today.

Here are just a few of them.

Truepic [21] is a tool that allows journalists to verify the authenticity of visual content. Truepic Display finds the source and history information of any media file, and Truepic Lens finds the metadata of any file.

YouTube DataViewer [22]. YouTube DataViewer, created by Amnesty International, helps extract the metadata of any video posted on YouTube. It also allows users to find video thumbnails and do reverse image search.

Social Networks

Since each social network has its own algorithm for disseminating information, you need separate tools for each network even just to search for information in it. Because of the enormous amount of information being processed, these tools naturally use AI. AI is also increasingly being used to track down inappropriate content, which in the early days of these technologies led to some rather curious situations.

Figure 9. The specific vocabulary of chess players, who play "black" and "white", led to the blocking of YouTube channels. As reported by dailymail.co.uk, the AI algorithm took the frequent use of these words for... racism [23].

As for social media analysis tools, OSoMe's Botometer® [24] is one such tool. Botometer is a project created by the Observatory of Social Media (OSoMe) at Indiana University. This tool scores Twitter accounts: 0 points means an account run by a human, and 5 points means a bot account. The tool also sorts bot types into categories: echo chamber, fake follower, financial bot, spam distributor, and others.

Hoaxy [25] is a mobile application that tracks the spread of misinformation from low-credibility sites created by humans. This tool helps journalists trace the path of articles in the virtual world. It also assesses the likelihood that bots or automation were involved in the distribution of these articles.

Conclusion

It is probably too early to draw any conclusions. We (and AI technologies) are only at the beginning of an interesting and unsafe competition between viruses and anti-viruses, between shields and arrows. As a universal tool, AI can be used both to generate fakes and to expose them. Everything depends on humans: on how people perceive information and on whether they are ready to protect themselves as disinformation and propaganda tricks become more sophisticated. It is difficult to make any predictions now, but we can say with certainty that a competence as important as media literacy is especially in demand in the 21st century.

References

2. How can you use AI to teach digital citizenship and literacy?

https://www.linkedin.com/advice/3/how-can-you-use-ai-teach-digital-citizenship

3. Ethical Aspects of Information Literacy in Artificial Intelligence

4. Google Search's guidance about AI-generated content (in Russian)

https://developers.google.com/search/blog/2023/02/google-search-and-ai-content?hl=ru

5. https://x.writefull.com/gpt-detector

6. https://writer.com/ai-content-detector/

7. Novyi Kanal published a fake biography of Oles Gonchar and blamed ChatGPT (in Russian)

9. https://www.snopes.com/ Snopes

10. https://www.factcheck.org/ FactCheck.org

11. https://www.politifact.com/ PolitiFact

12. https://www.bbc.com/news/reality_check

13. https://fullfact.org/ Full Fact

14. A Professional Artist Spent 100 Hours Working On This Book Cover Image, Only To Be Accused Of Using AI

https://www.buzzfeednews.com/article/chrisstokelwalker/art-subreddit-illustrator-ai-art-controversy

15. Daddy cool. A photo of Pope Francis in a stylish Balenciaga puffer jacket was generated by Midjourney, and it is one of the best neural-network memes (in Russian)

https://itc.ua/news/foto-papy-frantsyska-v-pukhovyke-feyk/

16. Midjourney now generates images only for money: all "because of the Pope" (in Russian)

17. How to recognise a fake (in Russian and English)

https://www.stopfake.org/ru/kak-raspoznat-fejk/

https://www.stopfake.org/en/how-to-identity-a-fake/

20. Zelensky ended up in a fake (in Russian)

https://youtu.be/gP1-uu4g93g?si=ITOPeB2ol2jmX54o

22. https://citizenevidence.amnestyusa.org

24. https://botometer.osome.iu.edu/

25. https://play.google.com/store/apps/details?id=com.hoaxy&hl=uk&gl=US