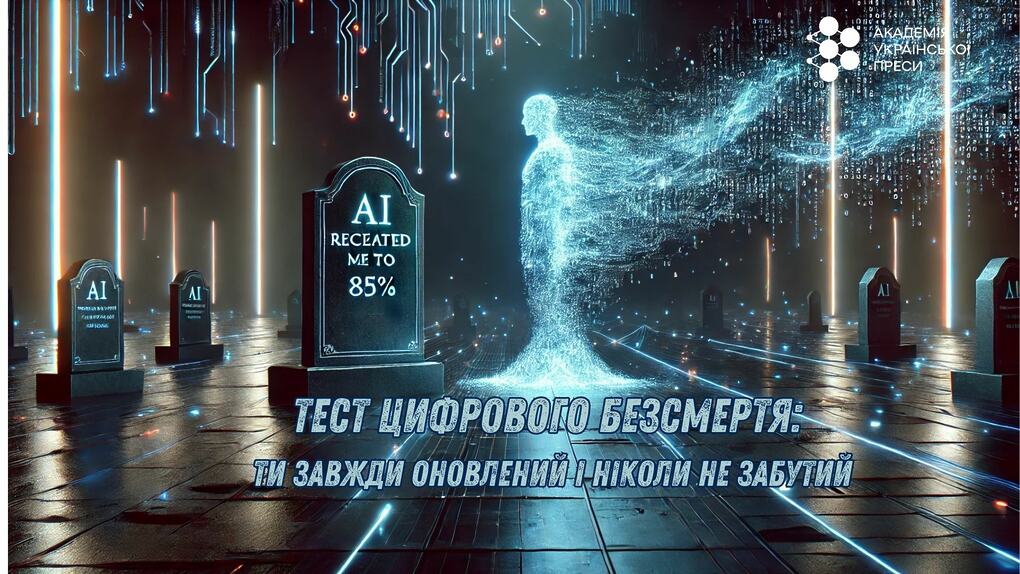

Imagine what your epitaph says: “It only took two hours for artificial intelligence to recreate me to 85 percent. Is it still me?”

If this scenario seems utopian or frightening, know that it is no longer science fiction. According to LiveScience, artificial intelligence can already recreate a person's personality in a matter of hours. But, to be honest, I'm more interested in what makes up the 15% it fails to recreate. My love of black tea after 11 p.m.? My talent for always forgetting where I put my keys?

In this seventh article in our series about LLMs (the previous six are available on the Academy of Ukrainian Press website), we dive into the future of digital immortality. With humor and a little skepticism, we will look at what it means for us and whether we can depart for the other world in peace, knowing that we will be “saved” in the cloud (though not completely).

The idea of appealing to “immortal” mentors has always existed. For example, the saints recognized by the church were the first “cultural avatars” to outlive their physical lives. We still read their texts, turn to them in prayer, seek advice in treatises, and listen to the interpretations of historians and theologians.

Imagine being able to talk to them through chatbots: asking St. Augustine for his opinion on contemporary ethical questions around AI, or asking Prince Yuri Dolgoruky whether it was really worth settling the swamps of moscovia.

Thanks to digitization technologies, this is becoming possible for those living today and for future generations. However, do not expect Taras Shevchenko to recommend investing in cryptocurrencies. And even if he does, will he remain an authoritative figure for you?

Humans can be copied: evidence and research

Imagine that in just two hours you can create a digital version of yourself. And no, this is not a prank with a cheap viral video. That's how long it takes modern language models to learn your communication style, your habits, and even your long-standing tradition of ending every message with an old-fashioned, non-animated “:)” emoticon.

This became possible thanks to research by Stanford University and Google, which outlined a simple but remarkably effective method of “copying” people. You sit down for a conversation about your values, life priorities, strange habits, and even your unrequited love. Then the magic happens: artificial intelligence uses this data to create your “digital twin”, so accurate that you might not tell yourself apart in a coffee line (or in a text message to your family).

This is where it gets interesting. In the experiment, which involved 1,052 people, the researchers surveyed the real participants twice: first right away, and then again two weeks later. Their digital copies answered the same questions. The result? The digital twins reproduced the participants' answers about 85% as accurately as the participants reproduced their own answers two weeks later. Just imagine: your outlook shifts with life's circumstances, and your AI copy turns out to think the same way.

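The methodology is quite straightforward: a two-hour interview, a language model that turns the transcript into a digital twin, and a comparison of the twin's answers with the person's own.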
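As we understand the published study, the 85% figure is a ratio rather than a plain hit rate: the twin's agreement with a person is divided by that person's agreement with their own answers two weeks later. The sketch below assumes simple multiple-choice answers scored by exact match; the function names and the ten-question answer sheets are hypothetical and do not come from the study, which used several surveys and tests.

```python
from typing import Sequence


def agreement(a: Sequence[str], b: Sequence[str]) -> float:
    """Share of questions on which two answer sheets give the same answer."""
    if not a or len(a) != len(b):
        raise ValueError("answer sheets must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def normalized_accuracy(twin: Sequence[str], first: Sequence[str], retest: Sequence[str]) -> float:
    """Twin-vs-person agreement divided by the person's own test-retest agreement."""
    self_consistency = agreement(first, retest)
    if self_consistency == 0:
        raise ValueError("no repeated answers, so the ratio is undefined")
    return agreement(twin, first) / self_consistency


# Hypothetical answers to ten multiple-choice questions (not data from the study).
first = list("ABCDABCDAB")   # the person, first sitting
retest = list("ABCDABCDCC")  # the same person two weeks later: 8 of 10 answers repeated
twin = list("ABCDABCACC")    # the digital twin: matches the first sitting on 7 of 10

score = normalized_accuracy(twin, first, retest)
print(round(score, 3))  # 0.875, i.e. 0.7 / 0.8
```

Normalizing this way means a twin is not penalized for the answers people themselves change from one sitting to the next; a twin that matched a person as well as the person matches themselves would score 1.0.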

This approach opens up incredible opportunities for psychology, sociology, and even government planning. New policies or social campaigns could be tested on “virtual people” instead of risking real resources and emotions. And let's not forget marketing: future advertising strategies can be tailored precisely to your tastes, because your digital twin will already have told advertisers everything about you.

However, there are also pitfalls to keep in mind. LLMs still often stumble where complex social context or “live” interaction is required: in games, negotiations, or sudden changes in conditions. Then there is ethics: the potential for manipulating people always grows in step with such technologies. And if your digital twin ever tells you that it prefers classical opera to Netflix, it may be time for a frank conversation with your artificial alter ego.

Are we ready to communicate with digital shadows?

Let's face it, everyone has had a moment when they wanted to hear the voice of a deceased loved one once again. For example, to ask your grandfather how to fix an old watch, or to ask your grandmother for the recipe for her signature borscht, where the ingredients were measured “a little”, “by eye”, and “until it looks perfect”.

Modern technologies create not just a monument to memory, but an interactive “window” into the past. Platforms such as HereAfter (and similar ones in China) allow you to create digital avatars of your loved ones, using audio recordings, photos, messages, and videos to “revive” memories of the deceased.

In China, for example, entire digital memorials are already being created: virtual spaces for memories. You can leave voice messages there, view photos, or even “chat” with a replica of a loved one who responds based on the data collected. It's not just a platform for memories - it's a real bridge between the past and the present.
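How such a replica “responds based on the data collected” is not described here, and these platforms do not necessarily work this way. One common pattern is retrieval: find the stored memories closest to the question and hand them to a language model as context. The sketch below assumes plain word overlap (Jaccard similarity) for ranking; the `Memory` class, the helper names, and the sample archive are hypothetical, and the actual call to a language model is left out.

```python
import re
from dataclasses import dataclass


@dataclass
class Memory:
    source: str  # hypothetical label, e.g. "voice note" or "letter"
    text: str    # transcribed content of the recording or message


def tokens(text: str) -> set[str]:
    """Lower-cased words of a text, as a set."""
    return set(re.findall(r"\w+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Shared words divided by all distinct words (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def retrieve(question: str, archive: list[Memory], k: int = 2) -> list[Memory]:
    """Return the k memories whose wording overlaps most with the question."""
    q = tokens(question)
    ranked = sorted(archive, key=lambda m: jaccard(q, tokens(m.text)), reverse=True)
    return ranked[:k]


def build_prompt(name: str, question: str, archive: list[Memory], k: int = 2) -> str:
    """Assemble the context a language model would receive (the model call is omitted)."""
    context = "\n".join(f"- ({m.source}) {m.text}" for m in retrieve(question, archive, k))
    return (
        f"Answer as {name} would, using only these recorded memories:\n"
        f"{context}\n"
        f"Question: {question}"
    )


# Hypothetical archive, for illustration only.
archive = [
    Memory("voice note", "For borscht I add beets first and a little vinegar by eye."),
    Memory("letter", "To fix the watch, clean the gears gently and add a drop of oil."),
    Memory("message", "Remember to wear a scarf, it is cold outside."),
]

print(build_prompt("Grandma", "How do you make your borscht?", archive, k=1))
```

A production system would more plausibly rank memories by semantic embeddings than by shared words, but the principle is the same: the replica can only echo what was recorded.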

At the same time, psychologists warn that communicating with “digital shadows” can backfire. Instead of helping you get over a loss, it can complicate grieving. How can you let go of a person if their voice is available 24/7, like a song on Spotify?

However, there are positive aspects. Think of it as a personal archive that not only preserves memories but also allows you to share them with future generations. Your great-grandchildren, for example, will be able to ask: “Great-grandmother, how did you survive the quarantine in 2020?” and get an emotional answer: “Back then, we bought buckwheat, washed our hands, wore masks, and thought it was the worst thing that could happen to Ukrainians.”

Ultimately, the question remains: where is the line between reality and imitation? When we talk to an avatar, do we remember that it is not a person but an algorithm with a database? And will we be able to “let go” of those who have passed away if their digital voices continue to resonate in our lives?

This raises other difficult questions.

And if AI decides to “improve” your personality by adding more optimism, reducing complaints, and replacing your love of TV shows with a passion for classical opera, will you still be you?

It's one thing when your avatar helps keep warm memories alive in your family. But it's quite another when it starts voting in elections. Imagine the headlines: “Digital Maxim 5.0 supported the candidate whom the real Maxim could not stand!”

The future of digital immortality

In 50 years (or even sooner), we will see not only interactive avatars of ordinary people but also digital “versions” of politicians, writers, and artists (something that is already causing outrage in Hollywood's 160,000-member actors' union, SAG-AFTRA). Perhaps we will get a whole series of Netflix shows in which the digital shadows of famous people recount their adventures.

And the key question is: will we be able to accept this new way of being? Or will we doubt the authenticity of our digital copies? Jacek Dukaj's novel “The Old Axolotl” and Dennis E. Taylor's Bobiverse novels about Bob Johansson (I personally prefer the second, although the first is closer to reality) are not only about technological breakthroughs but also about a philosophical rethinking of the challenges facing all of human civilization. We have always strived to leave a mark, but are we ready to become part of an endless digital cloud?

P.S. And if your digital version is ever reading this with you – hi! 😊

FAQ:

Can I turn off my digital avatar?

It depends on the platform. But would you want to do it? And would you give your avatar the right to do so?

Can my data be hacked?

Yes, just like any other data. Beyond that, your digital copy may “break” or start behaving differently than you would expect: it can be modified, infected with malware, or become incompatible with new software.

Summary:

The text focuses on the future of “digital immortality”, when artificial intelligence can create digital copies of people. The author considers how this affects the memory of the dead, the relationship with their “digital shadows” and what ethical and philosophical questions it raises. The author also covers the topics of data ownership, the authenticity of digital avatars, and the potential danger of losing control over them.

#LLM