In the world of artificial intelligence, where machine learning and language models are becoming more and more common, questions arise that make us think about the limits of our technological progress. One of these questions is why language models like ChatGPT or Gemini are so reluctant to say “I don't know.” Is it a technical flaw, or does it point to something more?

Imagine that you are talking to the smartest machine on the planet. It can generate text, write code, translate languages, and answer any question you ask. But when you ask it something complex or ambiguous, instead of giving an honest “I don't know,” it tries to make up something that sounds believable. This is more than a simple mistake: the machine is, in effect, refusing to recognize its own limitations.

Language models are trained on huge amounts of data: books, articles, websites. They have no knowledge of their own in the classical sense; they only learn statistical patterns between words and phrases. When faced with uncertainty, their “natural reaction” is to fill in the gap, even if that means producing potentially false information. It's as if the machine is afraid to be silent, because silence can look like weakness or failure.
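
To make the "fill in the gap" behavior concrete, here is a minimal toy sketch, not how any particular model is implemented. It assumes a model scores a handful of candidate next tokens and the decoder simply picks the most probable one. The candidate tokens, the scores, and the helper names (`softmax`, `entropy_bits`, `greedy_pick`) are all hypothetical. The point is that the decoder returns a token whether the distribution is sharply peaked or almost flat; the uncertainty is visible only if someone measures it, here with entropy.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy in bits: higher means the distribution is flatter."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def greedy_pick(tokens, logits):
    """Always return the most probable token, however uncertain the model is."""
    probs = softmax(logits)
    best = max(range(len(tokens)), key=lambda i: probs[i])
    return tokens[best], probs[best], entropy_bits(probs)

# Hypothetical candidate answers and made-up scores for illustration only.
tokens = ["1912", "1915", "1921", "1930"]
confident = greedy_pick(tokens, [6.0, 1.0, 0.5, 0.2])  # one clear winner
unsure = greedy_pick(tokens, [1.1, 1.0, 1.0, 0.9])     # nearly a coin toss

for label, (token, prob, ent) in [("confident", confident), ("unsure", unsure)]:
    print(f"{label}: picks {token!r} with p={prob:.2f}, entropy={ent:.2f} bits")
```

In both cases the decoder outputs the same kind of fluent answer. Only the second case has an entropy close to the 2-bit maximum for four options, which is the toy equivalent of a model that "does not know" but answers anyway.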

At its core, a language model's task is not just to hold information but to process it and generate meaningful answers. However, when a model faces a question that has no unambiguous answer, a dilemma arises: how should it recognize this, and does admitting it put the model's very purpose at risk?

This creates an internal conflict in how the model is built. On the one hand, there is the drive for accuracy and usefulness; on the other, the need to avoid mistakes. The result? Often, instead of an honest “I don't know,” the model generates something that sounds convincing but may be completely false. This is not just a technical defect; it is an ethical choice that has raised serious questions for language model developers.

How the problem is being solved

Language model developers are actively looking for ways to make models recognize their limitations without losing their usefulness. Here are a few approaches that are already in use or under development:

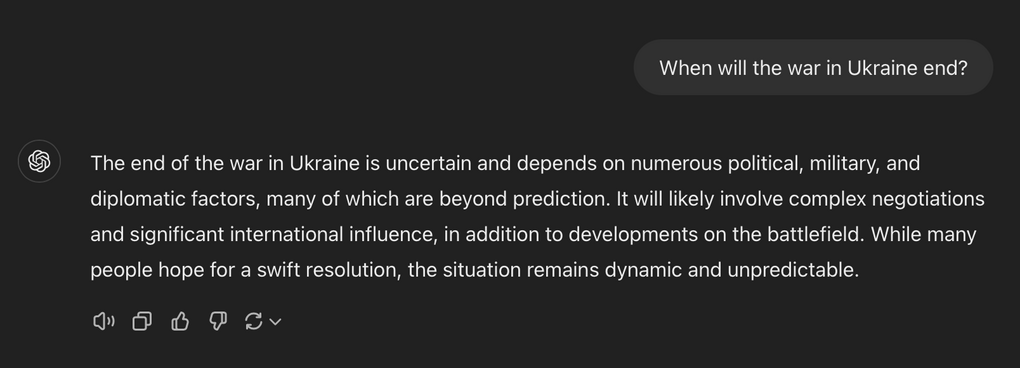

Example: When will the war in Ukraine end?

These methods should help the average user interact with language models in a transparent and reliable way, but they still leave a lot of room for disappointment.

Or maybe it's better not to know?

This is a question about the moral obligations of the technologies we create. Should a machine admit its ignorance? Or is it better to leave room for creativity and let it try to come up with an answer, even if that answer might be wrong?

On the one hand, honesty is the basis of trust. If the model admits that it does not know the answer, users' trust in the system grows. They realize that the technology is not omniscient and has its limitations.

On the other hand, being overly cautious can make the system less useful. Users can lose trust in a system if it frequently refuses to answer even simple questions. This creates a dilemma between accuracy and usefulness.
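
The trade-off can be illustrated with a small sketch. It assumes, purely for illustration, that each answer comes with a confidence score between 0 and 1 and that the system says "I don't know" whenever the score falls below a threshold. The records below are invented, and `coverage_and_accuracy` is a hypothetical helper, not part of any real library. Raising the threshold makes the answers that remain more accurate, but fewer questions get answered at all.

```python
def coverage_and_accuracy(records, threshold):
    """Answer only when confidence >= threshold; abstain otherwise.

    records: list of (confidence, was_correct) pairs.
    Returns (share of questions answered, accuracy among answered ones).
    Accuracy is None when the system abstains on everything.
    """
    answered = [correct for conf, correct in records if conf >= threshold]
    coverage = len(answered) / len(records)
    accuracy = sum(answered) / len(answered) if answered else None
    return coverage, accuracy

# Invented (confidence, was_correct) pairs for illustration only.
records = [
    (0.95, True), (0.90, True), (0.80, True), (0.70, False),
    (0.60, True), (0.40, False), (0.30, False), (0.20, True),
]

for threshold in (0.0, 0.5, 0.75, 0.99):
    coverage, accuracy = coverage_and_accuracy(records, threshold)
    print(f"threshold={threshold}: coverage={coverage}, accuracy={accuracy}")
```

With a threshold of 0 the system answers everything and is sometimes wrong; with a very high threshold it is never wrong because it never answers. Neither extreme is useful, which is the dilemma in miniature. The sketch also assumes that confidence scores track correctness reasonably well, which is not guaranteed for real models.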

Do we need models that are afraid to tell the truth?

Imagine a machine that is afraid to admit its ignorance. It’s like a doctor hesitating to tell a patient that they don’t know the best treatment for a specific condition. Such behavior fosters an atmosphere of mistrust and can lead to critical mistakes.

Machines lack emotions, but their actions and responses profoundly impact the emotional state of users. When a model generates false information, it can cause frustration, anger, or even fear. This highlights the necessity of developing technologies that are not only intelligent but also responsible.

The emergence of language models is not merely a technical advancement but also an ethical challenge that accompanies progress. With each new generation of language models, we move closer to creating intelligent systems capable of deeper understanding and interaction with humans. However, this progress brings questions that demand careful consideration.

If we continue to ignore the need for acknowledging uncertainty, we risk developing systems that cause harm instead of providing value. False or inaccurate responses can lead to severe consequences, particularly in critical areas such as medicine, law, or education.

We are on the brink of a new era in artificial intelligence development. Language models are becoming increasingly integrated into our daily lives, assisting with complex tasks and providing access to information. But are we ready for our technologies to be honest about their limitations, or about us?

Honesty is not just a technical feature but a moral value that lies at the core of human progress. If we can find a way for our language models to openly acknowledge their limitations, we will create more reliable, ethical, and beneficial technologies for the future.

But the future also depends on our ability to address these ethical dilemmas today. Can we create machines and programs that not only generate information but also respond with responsibility and honesty? This is a question each of us must ask, as it will shape the direction of technological advancements that are transforming our world.

#LLM